Social networks have made headlines for inadvertently providing a forum for those planning to take their own lives, with a number of people livestreaming their suicide attempts.

Identifying high-risk cases

Facebook has now taken the initiative to actively monitor its network for hints that someone may be contemplating suicide. It has created an artificial intelligence (AI) algorithm that looks for typical patterns in social media posts that may indicate a person is at risk.

“The AI is actually more accurate than the reports that we get from people that are flagged as suicide and self-injury,” Facebook Product Manager Vanessa Callison-Burch said in an interview with BuzzFeed News.

When the algorithm spots worrying patterns, it flags them to community managers, who can then investigate further and reach out proactively to users they believe to be at high risk. In cases considered less urgent, Facebook says it will prompt friends to contact the person concerned, offering a pre-written message to make it easier to start a conversation.

Online counselling

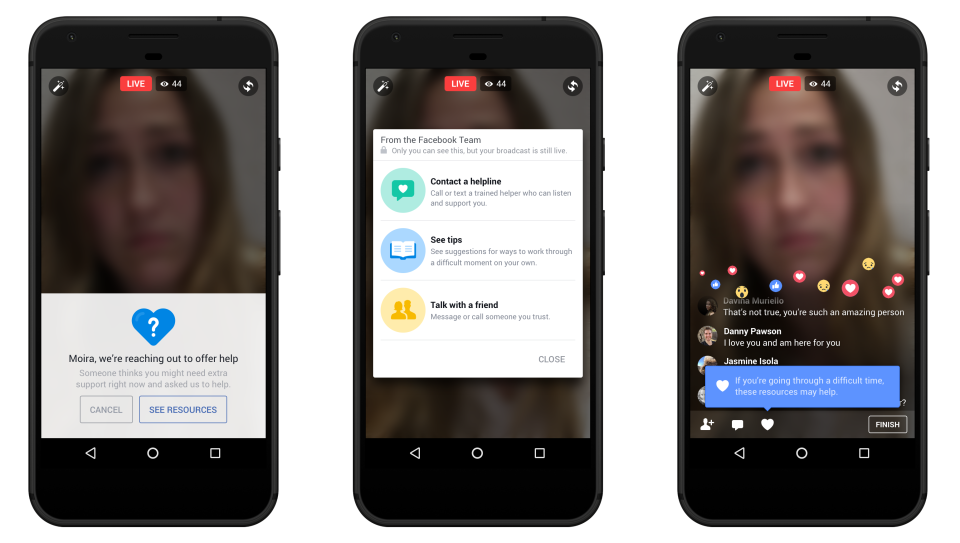

To tackle the live video streaming of suicide attempts, Facebook has also launched a new tool for friends to report videos that concern them. Once notified, Facebook will make prevention resources available on screen so that the Facebook Live broadcaster can, for example, live-chat with a counselling line. The company has partnered with a number of counselling and self-help organisations to provide support. Resources will also be offered to the person who reported the video so they can intervene as well.

Facebook has faced criticism for letting such Live videos continue to run, a choice the company says it makes in order to give friends or family a chance to intervene.

How other digital platforms react

If you search ‘how to commit suicide’ on Google, the search engine flags up the Samaritans helpline, along with ads for a number of other support organisations. Quora prefaces questions about suicide with a list of support lines to contact. Yet both sites still bring up links to suicide ‘manuals’.

Apple’s Siri, in contrast, suggests that you might want to speak to a suicide prevention centre, and serves up a list of prevention resources.

As AI and machine learning advance, they offer the potential to detect and support those in trouble before it is too late.